Micro Cook


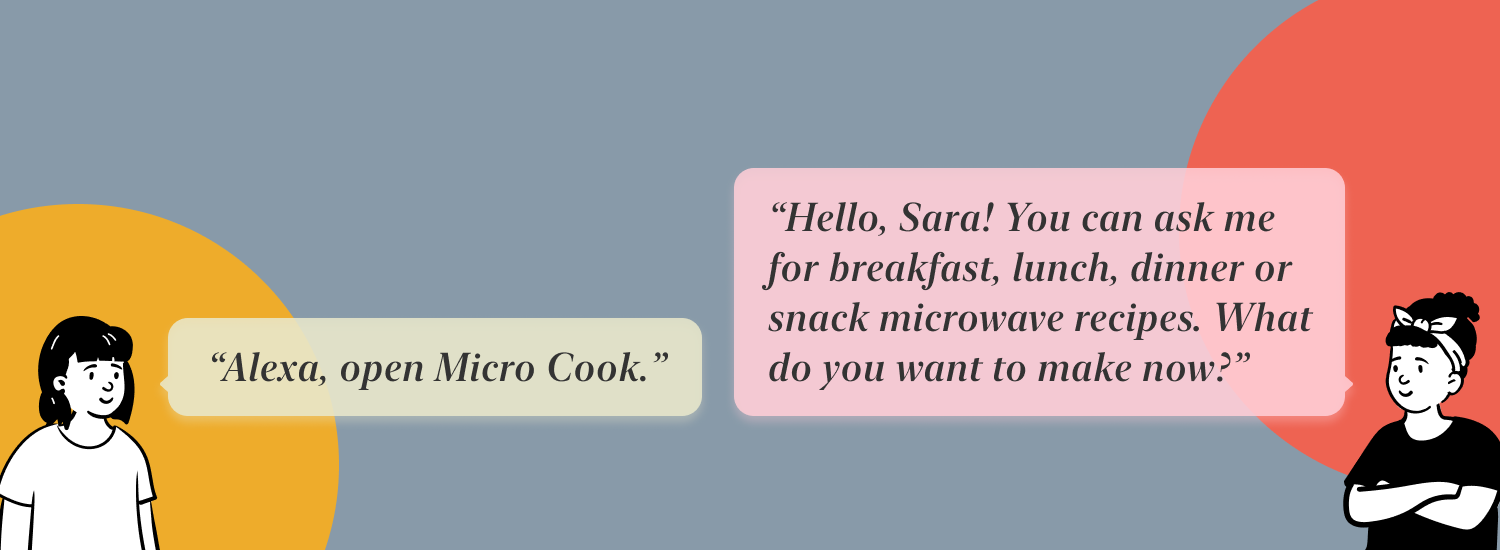

An Alexa skill designed to help users navigate diverse microwave recipes through a voice assistant.

Role

VUI Designer, Conversational Designer

Process

Personas, User Stories, Scripts & Flows, User testing

Scope

1 month, Apr 2021 - May 2021

Tools

Alexa Developer Console, Lucidchart, Paper & Pencil

How has Coronavirus shaped consumers’ food habits?

As people adjust to home confinement, they have settled into new routines that involve a lot more home cooking, and studies suggest these habits will continue even after the pandemic. In a OnePoll survey of 2,000 consumers, 55% said they were tired of cooking while working from home.

The common goals among those surveyed are:

Come up with new recipe ideas

Save time in the kitchen

Spend less time on meal preparation

43% of respondents wanted to spend less time planning their meals, and 40% of all participants named planning different meals every day as the biggest challenge they faced.

As these changes continue to take hold across society, how can we help people make better, quicker and easier choices when it comes to home cooking?

1. Understand Users and System

To better understand the target users before fleshing out user stories for the voice scripts, I investigated their main pain points through three short user interviews. This helped me immerse myself in their mental models and shape both a user persona and a system persona, while keeping the users' placeona in mind.

Will users feel comfortable executing a voice task in expected environments?

Once I had a clear picture of who I was designing for and how the system would represent the overall brand, I moved on to writing user stories. At this stage, my main goals were to set the context, identify a solution, and explain the user need. Before writing the stories, I also checked whether the physical context was suitable for voice interaction.

Here’s my basic reference point for how occupied users are when making dinner at home with the skill:

Hands: Busy

Eyes: Mostly busy

Ears: Free

Voice: Free

2. User Flows & Voice Scripts

What is the logic of the general path between the user and the voice assistant?

When designing flows for a GUI, we think mostly in terms of individual pages. Flows in a VUI serve a different function: they show what a system can do and how it will respond to various user inputs, which we call intents (a distinct functionality or task the user can accomplish).

I wrote a few sample dialogues to help me move forward with designing the user flows. The following intents capture all of the initial core functionality:

Choose Type Intent

Instructions Intent

Filter Intent

Ingredients Intent

Save Intent

Next Step Intent

Sort Intent
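One way to picture the intents above is as a routing table: each recognized intent maps to a handler that produces the next reply, and anything unrecognized goes to a fallback. The sketch below is a hypothetical, framework-free Python illustration; the handler functions, the intent name strings and the reply wording are assumptions for illustration, not the skill's actual Alexa interaction model or SDK code.

```python
from typing import Callable, Dict

# Hypothetical handler type: takes mutable session state, returns Alexa's reply.
Handler = Callable[[dict], str]

def choose_type(session: dict) -> str:
    # Placeholder reply; the real prompt comes from the voice script.
    meal = session.get("mealType", "lunch")
    return f"Here are some {meal} recipes."

def next_step(session: dict) -> str:
    # Advance the step counter stored in the session.
    session["step"] = session.get("step", 0) + 1
    return f"Step {session['step']}."

def fallback(session: dict) -> str:
    # Reply used when the request matches no intent.
    return ("Sorry, I didn't catch that. You can ask for breakfast, "
            "lunch, dinner or snack recipes.")

# Hypothetical intent names mirroring the list above.
ROUTES: Dict[str, Handler] = {
    "ChooseTypeIntent": choose_type,
    "NextStepIntent": next_step,
    # InstructionsIntent, FilterIntent, IngredientsIntent, SaveIntent and
    # SortIntent would be registered the same way.
}

def route(intent_name: str, session: dict) -> str:
    """Dispatch a recognized intent to its handler, or fall back."""
    handler = ROUTES.get(intent_name, fallback)
    return handler(session)
```

For example, `route("ChooseTypeIntent", {"mealType": "dinner"})` returns `"Here are some dinner recipes."`. Keeping every intent in one table makes it easy to check that each flow in the diagram has a handler and that unknown input always lands somewhere recoverable.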

Here’s how the flow for the Choose Type Intent looks:

To take the voice experience from low to high fidelity, I applied some dialogue best practices while developing the voice scripts.

Echo User Information

When talking to a voice assistant, users don't have the non-verbal or visual cues that confirm understanding in human conversation or in a GUI, so after each prompt I had the reply echo back the user's response as confirmation.
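Echoing works as an implicit confirmation: the reply repeats the value the user just gave before moving on, so a misheard answer is caught immediately. The Python sketch below is hypothetical; the "Okay, ..." phrasing, the function names and the sample recipe names are illustrative assumptions, not lines from the actual script.

```python
from typing import List

def spoken_list(items: List[str]) -> str:
    """Join items the way they are read aloud: 'A, B or C'."""
    if len(items) <= 1:
        return "".join(items)
    return ", ".join(items[:-1]) + " or " + items[-1]

def echo_and_prompt(meal_type: str, recipes: List[str]) -> str:
    """Repeat the user's choice (implicit confirmation), then ask the next question."""
    return (f"Okay, {meal_type}. "
            f"Which {meal_type} would you prefer, {spoken_list(recipes)}?")
```

With `echo_and_prompt("dinner", ["chili", "risotto", "mac and cheese"])`, the user hears "Okay, dinner." first, so if they had actually said "lunch" they can correct it before choosing a recipe.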

Avoid Rhetorical Questions

Users can get confused when a prompt asks two questions: first a yes/no question, followed by a choice between options. To keep users from barging in before the system finishes the sentence, I rephrased the prompt to help them focus on the task at hand.

*Feel free to visit here to see the full voice script.

3. User Testing

Do the users find the experience smooth enough? Do they expect extra support apart from the voice?

With the scripts finished, I conducted usability test sessions with 5 users, a mix of novice and expert users. The test plan included 6 scenario tasks in a Wizard of Oz format, with me playing the part of Alexa. The tests sought to answer the following research questions:

Can participants easily search and select recipes without extra help?

Do participants find the process smooth and enjoyable?

Do participants prefer specific instructions during meal preparation?

The findings in the final test report revealed three prominent issues, which I rated using Jakob Nielsen’s severity scale, together with an additional feasibility score, to prioritise development.
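Nielsen's severity scale runs from 0 (not a usability problem) through 1 (cosmetic), 2 (minor) and 3 (major) to 4 (usability catastrophe). How the severity and feasibility scores were combined is not stated here, so the Python sketch below assumes one simple rule: sort by severity first and use feasibility only to break ties. The 1 to 3 feasibility scale and the `Issue` type are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Issue:
    name: str
    severity: int     # Nielsen's scale: 0 = not a problem ... 4 = catastrophe
    feasibility: int  # hypothetical scale: 1 = hard to fix, 3 = easy to fix

def prioritise(issues: List[Issue]) -> List[Issue]:
    """Order issues by severity first, breaking ties with feasibility."""
    for issue in issues:
        if not 0 <= issue.severity <= 4:
            raise ValueError(f"severity out of range for {issue.name}")
    return sorted(issues, key=lambda i: (i.severity, i.feasibility), reverse=True)
```

A weighted sum (for example, severity times feasibility) is another option. A lexicographic sort, however, keeps a severe but hard-to-fix problem ahead of an easy cosmetic one.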

Error #1: Users were held back by not knowing what the skill could do.

4/5 users didn't try asking for recipes with certain ingredients because they were unsure what would happen as a result and were afraid of hearing a fallback message.

Suggested changes: For the user's first few passes through the flow, use fuller instructions that explain what the skill can do in each phase.

Intro - “Welcome to Micro Cook! You can ask me for breakfast, lunch, dinner and snack recipes, or ask me for recipes with or without certain ingredients. Would you like to hear some lunch recipes now?”

Choose Type - “I have three recipes, or you can also ask for more. Which {mealType} would you prefer, {Recipe_1}, {Recipe_2} or {Recipe_3}?”
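Giving fuller instructions only "during the first few times" requires the skill to remember how often a user has opened it. The Python sketch below assumes that a session count is stored per user somewhere persistent. The threshold of three sessions and the shorter returning-user prompt are hypothetical; only the full intro text comes from the script above.

```python
# Hypothetical threshold: how many sessions count as "the first few times".
VERBOSE_SESSIONS = 3

# Full intro, taken from the voice script above.
FULL_INTRO = ("Welcome to Micro Cook! You can ask me for breakfast, lunch, dinner "
              "and snack recipes, or ask me for recipes with or without certain "
              "ingredients. Would you like to hear some lunch recipes now?")

# Hypothetical shorter prompt for returning users.
SHORT_INTRO = "Welcome back to Micro Cook! What would you like to cook today?"

def intro_prompt(session_count: int) -> str:
    """Return the fuller intro while the user is still new (session_count is 1-based)."""
    return FULL_INTRO if session_count <= VERBOSE_SESSIONS else SHORT_INTRO
```

The same pattern extends to the Choose Type prompt: keep "or you can also ask for more" for new users and drop it once they have used that option.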

Error #2: Fallback messages confused users and couldn't help them recover from errors.

3/5 users found the fallback messages confusing because the original suggestion to go back to a certain step didn't help them get out of the loop. When they reached the system's limit on recipes, they preferred more support in selecting one.

Suggested changes: Instead of asking users to go back to the last step, provide additional support to help them move on to the next step.

“You've reached my system limit. Each day you have 6 recipes for each course. Would you like me to repeat the first three {basedOnContextMealType} recipes of today?”
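The limit message implies simple paging logic: recipes are offered three at a time (per the Choose Type prompt), capped at six per course per day. When the cap is reached, the skill offers to start over instead of sending the user back a step. The Python sketch below assumes that today's recipes for a course arrive as a list. The function name and return shape are hypothetical, and the script's `{basedOnContextMealType}` placeholder is written as `{meal}` for Python formatting.

```python
from typing import List, Tuple

DAILY_LIMIT = 6  # recipes per course per day, as stated in the message
BATCH_SIZE = 3   # recipes offered per turn, as in the Choose Type prompt

LIMIT_MESSAGE = ("You've reached my system limit. Each day you have 6 recipes for "
                 "each course. Would you like me to repeat the first three {meal} "
                 "recipes of today?")

def next_batch(todays_recipes: List[str], offered: int,
               meal: str) -> Tuple[List[str], int, str]:
    """Return (next recipes to read, updated offered count, limit message or "").

    todays_recipes: hypothetical input, today's recipes for this course.
    offered: how many recipes have already been read out this session.
    """
    available = todays_recipes[:DAILY_LIMIT]
    if offered >= len(available):
        # Cap reached: offer a way forward instead of a dead end.
        return [], offered, LIMIT_MESSAGE.format(meal=meal)
    batch = available[offered:offered + BATCH_SIZE]
    return batch, offered + len(batch), ""
```

If the user answers "yes" to the limit message, resetting `offered` to 0 replays the first three recipes, which is the recovery path the suggested change describes.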

Error #3: The step-by-step instructions were impossible to follow.

3/5 users couldn’t follow along with the instructions because they were simply too long. This was especially true when the system listed ingredients: users expected the list to be sent to their phone so they could follow it more easily.

Suggested changes: Keep each step to no more than one sentence (around 150 words).
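A rule like this can be checked automatically before a script reaches testing. The Python sketch below uses a naive sentence split (on `.`, `!` or `?` followed by whitespace) that will miscount abbreviations such as "approx.". The word limit is a parameter defaulting to the figure stated above. The function names are hypothetical.

```python
import re
from typing import List

def split_sentences(text: str) -> List[str]:
    """Naive split on ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def step_problems(step: str, max_words: int = 150) -> List[str]:
    """List the reasons a recipe step may be too long to follow by ear."""
    problems = []
    if len(split_sentences(step)) > 1:
        problems.append("more than one sentence")
    if len(step.split()) > max_words:
        problems.append(f"more than {max_words} words")
    return problems
```

For example, `step_problems("Stir the rice. Microwave for one more minute.")` flags the step as more than one sentence, suggesting it should be split into two steps that the user advances through with the Next Step Intent.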

What I’ve Learned

Write for the ear, not the eye.

Building an Alexa skill was a refreshing experience: unlike a GUI, it requires looking at how humans interact with the machine from different perspectives. To better understand how users receive and respond to information without the help of visual elements, I constantly reminded myself throughout the process to put myself in different scenarios.

Balance brevity with friendliness.

The uncanny valley is a great example of how humans react to machines that seem almost, but not quite, human, and working on a VUI helped me understand the importance of giving a voice “personality” to engage users. Balancing what users expect from a voice assistant against how they actually respond to the system’s friendliness is a topic worth studying.

Where to Improve

Assumptions on Verbal Habits

When writing the sample dialogues, it was hard not to assume that users would talk to the machine the way I do. Even though I ran a small preference test via a table read, unnatural phrasing still made it into the voice scripts. After a few rounds of usability tests, I’ll always remember that it’s more important for a script to sound right than to look like proper writing.

VUI & GUI

With the help of the Echo Show, recipes become easier to follow, but there is still plenty of room for improvement. Taking one user’s advice into account, merging parts of the flow with visual elements could make the experience more complete: for example, sending the ingredient list to the phone, showing timers on the screen, or displaying extra information to speed up selection.